Sensorimotor Prosthetics

We work with stakeholders to create fit-for-purpose prostheses.

Acquiring a new skill, for example, learning to use chopsticks, requires accurate motor commands to be sent from the brain to the hand, and reliable sensory feedback from the hand to the brain. Over time and with training, the brain learns to handle this two-way communication flexibly and efficiently. Inspired by this sensorimotor interplay, our research is guided by a conviction that progress in prosthetic limb control is best achieved through a strong synergy of motor learning and sensory feedback.

We therefore study how neural and behavioural processes interact to control hand movements, with the ultimate aim of developing prosthetic control solutions that users find fit for purpose.

- Video: Multi-Grip Classification-Based Prosthesis Control With Two EMG-IMU Sensors

Specifically, we are developing

- novel methods and technologies that harness the brain's flexibility in learning new skills for closed-loop prosthesis control;

- efficient artificial intelligence algorithms for processing multi-modal data collected with hybrid sensors;

- effective systems and stimulation paradigms to restore sensory feedback in prosthetic control;

- a data-driven care model that enhances the experience of receiving a prosthesis.

Our work on sensorimotor prosthetics builds on a long-term interaction between Dr Nazarpour, Prof Vijayakumar and Dr Roche.

Active Topics

We have worked on several classes of upper-limb prosthesis controllers to design the most intuitive control interfaces. We have also developed new ways of combining multi-modal bio-signals to make prosthesis control more intuitive. The main focus has been to reduce cognitive load and improve the robustness of operation.

- Discrete action control for prosthetic digits, bioRxiv, 2020.

- Multi-grip classification-based prosthesis control with two EMG-IMU sensors, IEEE Trans Neural Sys Rehab Eng, 28(2):508-518, 2020.

- Improved prosthetic hand control with concurrent use of myoelectric and inertial measurements, J NeuroEng Rehab, 14:71, 2017.

When controlling a prosthesis, the patterns of neural and/or muscular activity can differ from those used to control the biological limb. We explore how far this activity can deviate from the natural patterns employed in moving the biological arm and hand. Specifically, we examine whether prosthesis users can learn to synthesise new functional maps between muscles and prosthetic digits; for instance, whether a user with a partial hand amputation can grasp an object by contracting a small group of muscles that do not naturally control the grasp. We call this approach abstract decoding: the user learns to generate functional muscle activity patterns. This contrasts with pattern recognition and regression approaches, in which the prosthesis learns to identify movement intent by decoding EMG patterns, without considering the user's capacity to learn.
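To make the distinction concrete, the following is a minimal sketch of a fixed, non-adaptive map from two muscle signals to grips. It is not the group's implementation: the names `Target`, `normalise`, `select_grip`, the three grip labels, and the target layout are all hypothetical, chosen only to illustrate the idea that the map stays constant while the user learns to produce activity that lands in the right zone.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class Target:
    """A rectangular zone of the 2-D muscle-activity space tied to one grip (hypothetical)."""
    grip: str
    a_range: Tuple[float, float]  # allowed range of normalised activity, muscle A
    b_range: Tuple[float, float]  # allowed range of normalised activity, muscle B

    def contains(self, a: float, b: float) -> bool:
        return (self.a_range[0] <= a <= self.a_range[1]
                and self.b_range[0] <= b <= self.b_range[1])


def normalise(envelope: float, rest: float, comfortable_max: float) -> float:
    """Scale a smoothed EMG envelope to [0, 1] using per-user calibration values."""
    if comfortable_max <= rest:
        raise ValueError("comfortable_max must exceed rest")
    scaled = (envelope - rest) / (comfortable_max - rest)
    return min(1.0, max(0.0, scaled))


# Assumed layout. The map is fixed: nothing here is trained on the user's data,
# so any improvement in performance comes from the user learning the map.
TARGETS = (
    Target("power", (0.6, 1.0), (0.0, 0.3)),   # mostly muscle A
    Target("pinch", (0.0, 0.3), (0.6, 1.0)),   # mostly muscle B
    Target("tripod", (0.6, 1.0), (0.6, 1.0)),  # both muscles together
)


def select_grip(a: float, b: float,
                targets: Sequence[Target] = TARGETS) -> Optional[str]:
    """Return the grip whose zone contains the activity point, or None if no zone does."""
    for target in targets:
        if target.contains(a, b):
            return target.grip
    return None
```

In a pattern recognition scheme, by contrast, the zone boundaries would be fitted to recordings of the user's natural contractions rather than fixed in advance.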

If you are interested in learning more about abstract decoding, please see the following papers or contact Dr Kia Nazarpour.

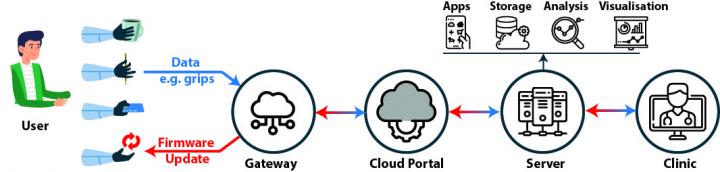

In current clinical practice, a prosthesis is fitted in the clinic. Once the user is home, the clinician cannot tell whether the prosthesis is being used or how well it is functioning. Up to 44% of users abandon their prosthesis [Salminger et al. Disability & Rehab, 2020].

We aim to co-create the world’s first Internet-enabled prosthetic hand, connecting the user and the clinic seamlessly. Secure data flow and artificial intelligence (AI) sit at the heart of this bidirectional communication link. The accompanying figure illustrates our vision.

If you are interested in joining this initiative, please contact Dr Kia Nazarpour.

- Arduino-based myoelectric control: Towards longitudinal study of prosthesis use, Sensors 21(3):763, 2021.

Key Collaborators

| Name | Institution |
| --- | --- |
| Matthew Dyson | Newcastle University |
| Sarah Day | University of Strathclyde |

Bionics+: User-Centred Design and Usability of Bionic Devices

EPSRC (2021-2025)

A smart electrode housing to improve the control of upper limb myoelectric prostheses

NIHR (2021-2024)

Sensorimotor learning for control of prosthetic limbs

EPSRC (2018-2023)

How fast is too fast? Boundaries to the perception of electrical stimulation of peripheral nerves

IEEE Transactions on Neural Systems and Rehabilitation Engineering 30:782-788, 2022

Spatio-temporal warping for myoelectric control: an offline, feasibility study

Journal of Neural Engineering 18:066028, 2021

A more human prosthetic hand

Science Robotics 5(46):eabd9341, 2020

Learning, generalization, and scalability of abstract myoelectric control

IEEE Transactions on Neural Systems and Rehabilitation Engineering 28(7):1539-1547, 2020

Multi-grip classification-based prosthesis control with two EMG-IMU sensors

IEEE Transactions on Neural Systems and Rehabilitation Engineering 28(2):508-518, 2020

Long-term implant of intramuscular sensors and nerve transfers for wireless control of robotic arms in above-elbow amputees

Science Robotics 4(32):eaaw6306, 2019

Electrophysiology

- Blackrock Microsystems

- A-M Systems

- Delsys

- Digitimer

Prosthetic Hands

- COVVI Ltd

- Prensilia s.r.l.

- Össur

Other

- Turntable

- Cyberglove

- Setups for Outcome Measurement

  - SHAP

  - Box and Blocks